Humanities researchers want to analyze images that exist in text-image environments (journal articles, books, newspapers, letters) with computational tools. To do so, researchers must typically separate images from accompanying text. Researchers can manually extract images from digital text-image environments (e.g., with “screenshot,” “snipping,” and “crop” tools on Mac or PC; “crop” and “marquee” tools in Adobe and Microsoft products). Manual extraction becomes time-consuming, tedious, and prone to error for researchers who want to extract hundreds to thousands of images from hundreds to thousands of PDF files.

We are developing a Python-based image extraction tool, called PicAxe, that humanities researchers can easily apply to large corpora of diverse text-image PDF documents. Our tool automatically extracts images like diagrams, photographs, graphs, or tables, from large corpora of PDF files. The tool will be integrated into the Giles Ecosystem (Damerow et al. 2017) so researchers with no coding experience can upload large corpora of digital documents for storage, OCR, and text and image extraction . We will release the code under an open-source license and the source code will remain accessible via GitHub.

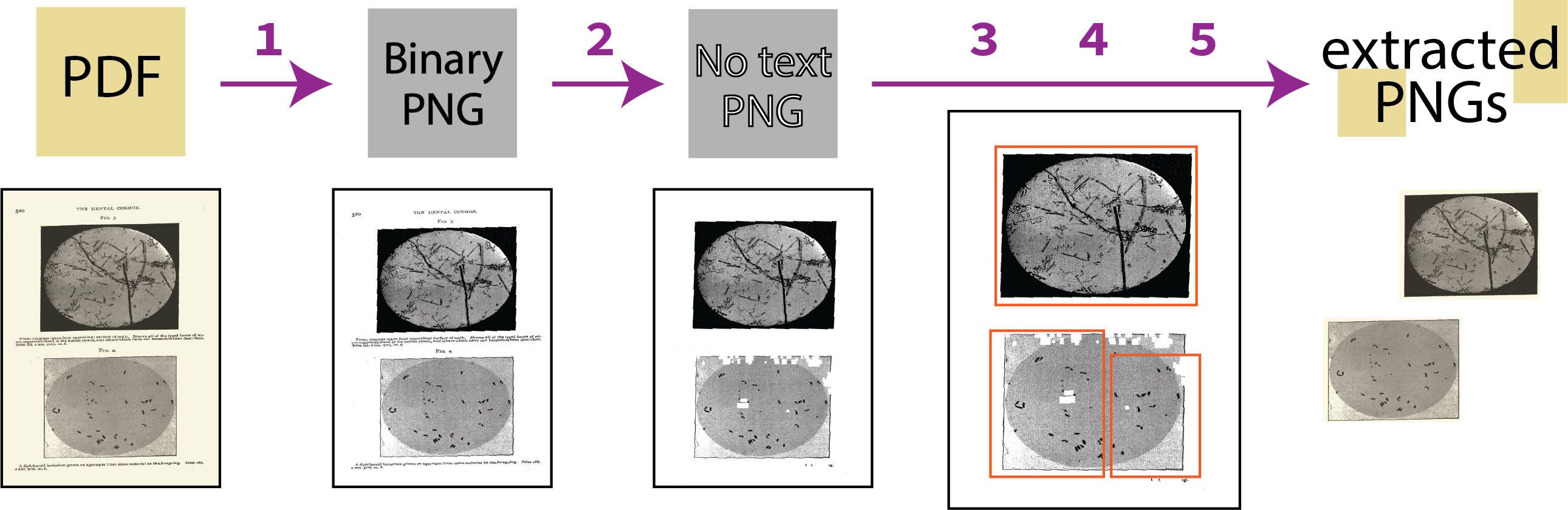

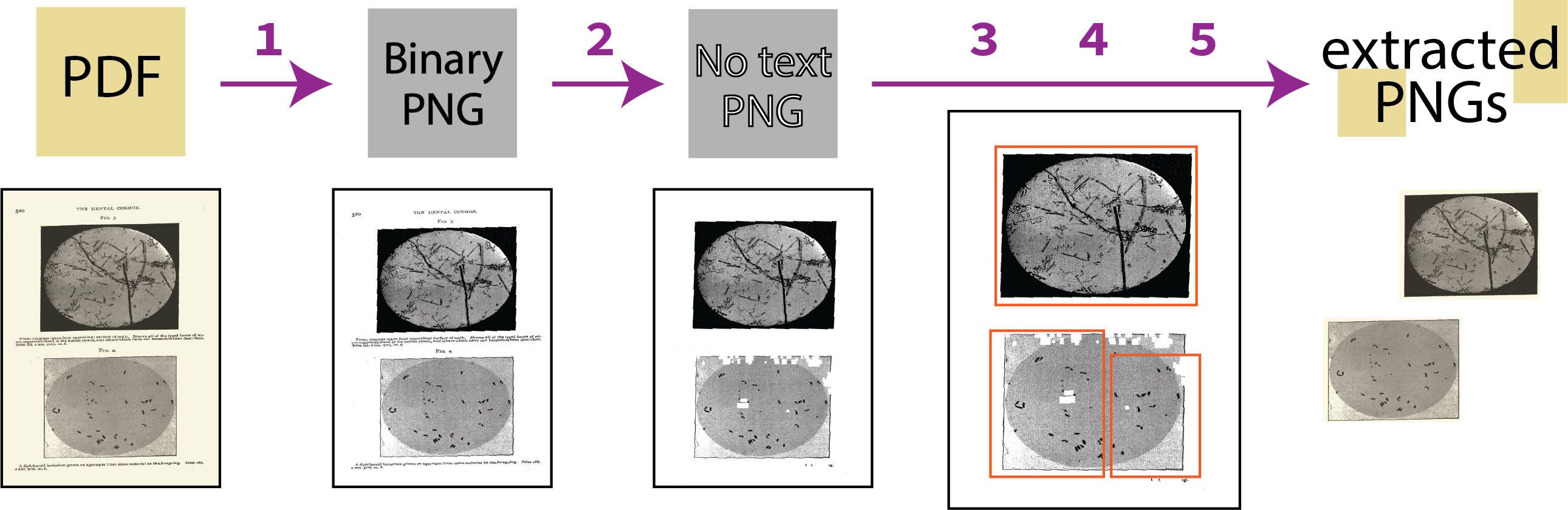

Currently, our tool (1) converts PDF file pages into individual binary .png files, (2) applies pytesseract 1 to detect and remove detected text from the .png files, (3) applies a combination of OTSU thresholding and contour detection to the text-free .png to identify boundaries of remaining image marks, (4) filters boundaries with smaller areas and combines them in order to reduce noise and identify whole individual images, (5) and extracts any images and text from the original PDF pages that fall within the identified boundaries (Figure 1). Currently, any researcher can download and use the code for the tool from GitHub ( https://github.com/acguerr1/imageextraction ).

Figure 1. PicAxe image extraction workflow (as of June 2024).

Our tool is different from existing free or open-source tools for automatic image extraction (e.g., PyMuPDF Pillow 2 and pdfplumber 3 ) in three ways:

In summary, our too aims to manage the amount of variation in extractability of image data inherent to the PDF file format and the stylistically diverse text-image environments with which humanities researchers commonly work.

So far, we tested the tool on a corpus of 286 scientific journal articles and book chapters published from 1929 to 1974 representing an early history of the microbial biofilm concept. The tool generally extracts all diagrams, photographs, and graphs successfully, and under-extracts tables, for a document-level accuracy of ~76% compared to manual extraction. Statistical data does not fully reflect the accuracy of extraction as the combination of over- and under- extraction of individual images varies widely from PDF to PDF and corpus to corpus.

We are working to improve the tool. It currently extracts ~516 images per hour and we will shorten overall extraction time. We are testing additional corpora and adding functionality, including automatically removing scanned page borders and line breaks that prevent successful image extraction. The code will also generate a .csv document with relevant metadata for extracted images. We seek input on improving the tool.