1.

Introduction

From the early 1960s to the mid-1970s, Asheville, North Carolina, underwent urban renewal, a national program aimed at modernizing "blighted" areas (Lee et al. 2017). This process, mostly impacting African-American neighborhoods, displaced families, businesses, and organizations for economic and infrastructure development. Due to its historical significance and on-going reparation efforts in many cities in the United States (Honosky 2024), understanding parcel-level information about how each property was acquired by the housing authority is one of the most important steps. This paper focuses on the East Riverside neighborhood (now Southside), the largest single area in the south-eastern U.S. affected by urban renewal. Our target documents are property acquisition documents originally produced by the Housing Authority of the City of Asheville (HACA) that details the property acquisition processes of nearly 1,000 properties in Southside. They include property's sales history, including appraisals, offers, rejections, and court cases.

However, the extensive lengths (20-200 pages per parcel) and complexity of property acquisition documents from the urban renewal era pose challenges in digital curation, particularly for handwritten documents. This is a persistent issue for digital humanities (DH) scholars. Although handwritten text recognition (HTR) platforms such as Transkribus (Kahle et al. 2017) and Google Document AI have demonstrated good performances (Ingle et al. 2019, Kiessling et al. 2019), their practical application by non-experts remains challenging.

1

This is due to additional technical efforts required to configure recognition systems, such as training for specific document types, data structuring, and cleaning processes.

In November 2023, OpenAI released GPT-4V(ision), which includes Optical Character Recognition (OCR) capabilities. Given that much of the data curation, processing, and cleaning can be managed through user-friendly prompts (i.e., chat), we aim to conduct an initial assessment of GPT-4V's effectiveness in transcribing hand-written documents from the urban renewal collection. If GPT-4V can accurately digitize hand-written documents through carefully crafted prompts, it will become a valuable tool for non-experts in transcribing historical documents on a large scale. Even if it falls short, conversely, it is still crucial to understand and discuss the implications of using Large Language Models (LLMs) for digitizing archival documents, as its performance matters to many DH scholars.

This paper evaluates GPT-4V's performance in transcribing the cover pages of selected urban renewal documents. These cover pages, all hand-written and generally more challenging to read (even for humans) compared to other parts of the documents, are valuable for researchers and practitioners who work on urban renewal, as they succinctly provide key information about property acquisition processes.

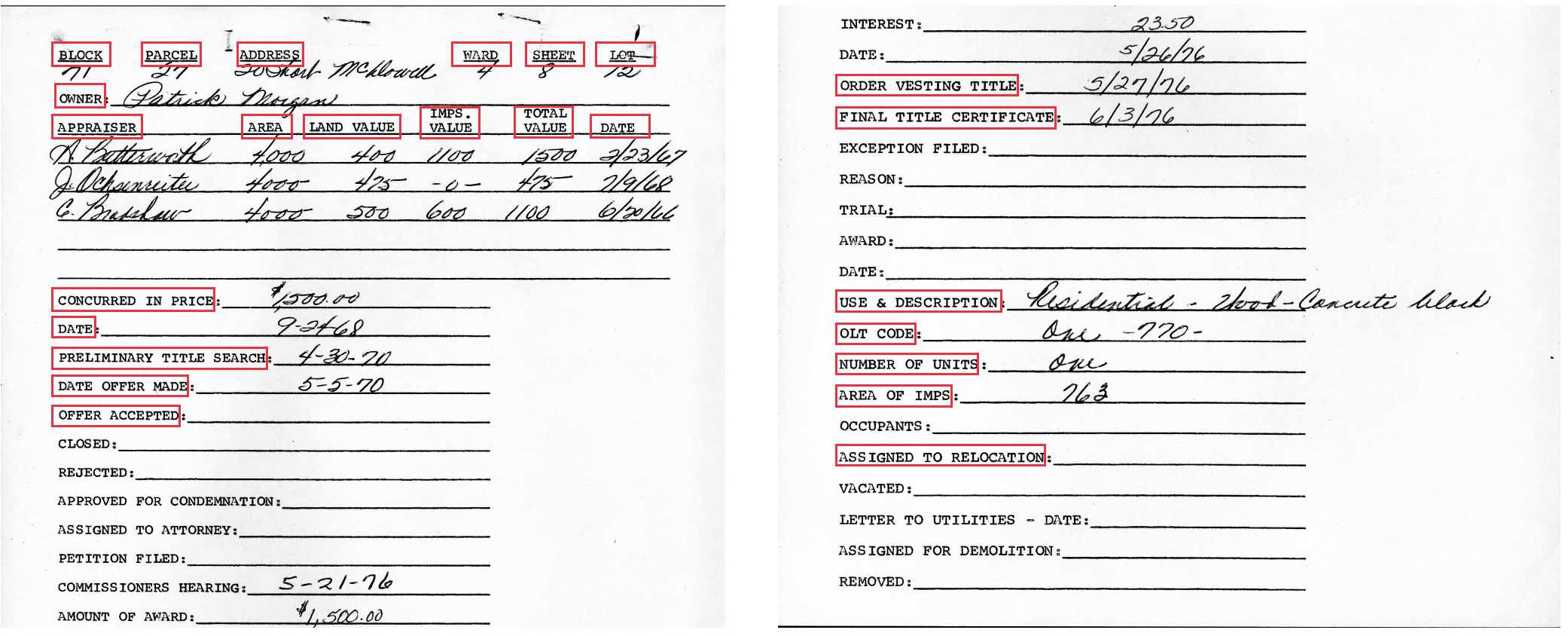

Figure 1 shows an example of original documents.

Figure 1: An example of original document. Red rectangles are our focus fields.

2.

Approach

We utilized the OpenAI APIs to make queries to GPT-4V. We employed constrained prompting techniques to instruct GPT-4V to read the text in the document (Yang et al. 2023).

2

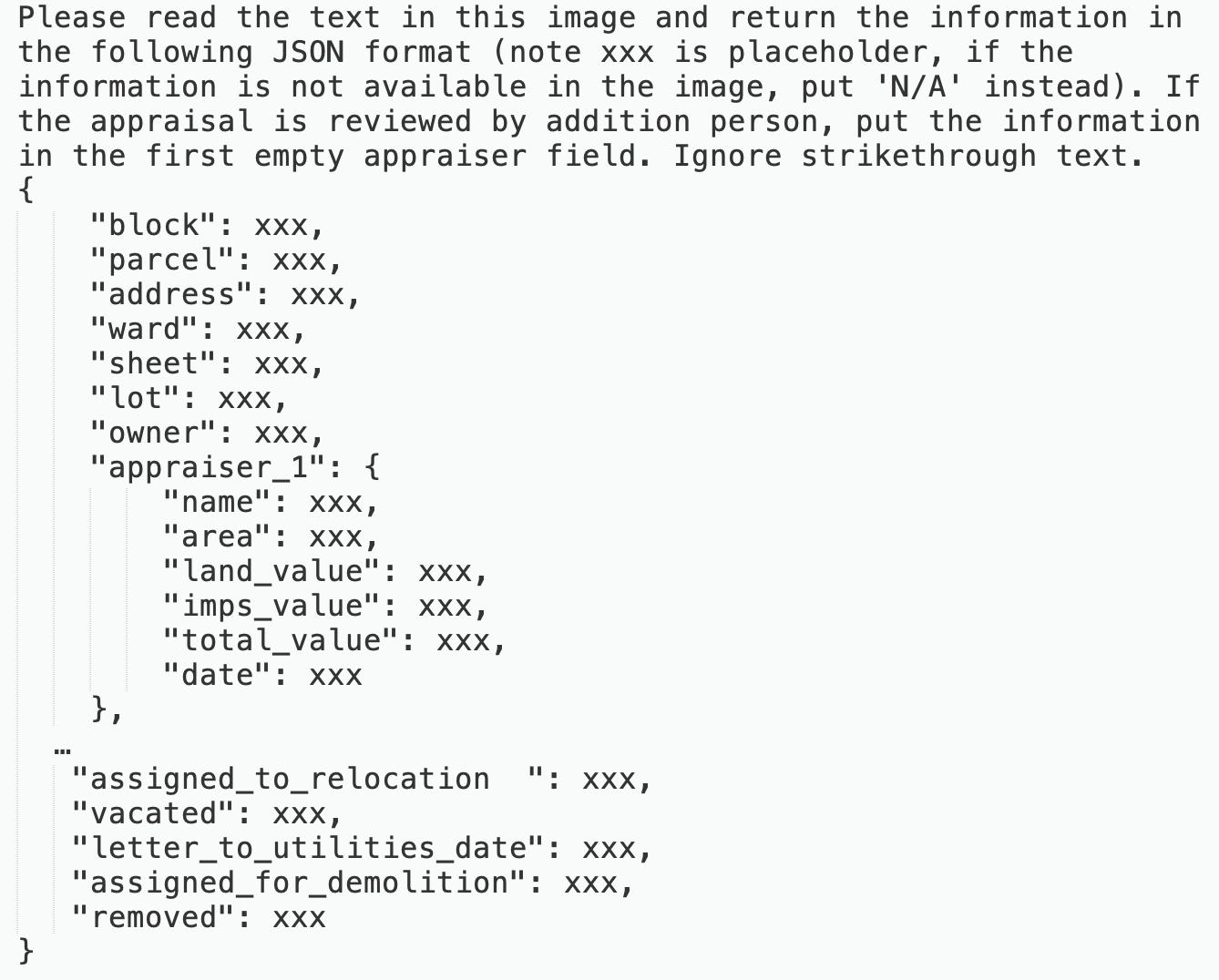

The objective was to retrieve information from individual fields and organize it into a specific JSON format. We engineered the prompt following the guideline by Yang et al. (2023). Through iterative refinements of the prompts, the constrained prompt was finalized as depicted in

Figure 2. Using this prompt, we processed 50 select documents, subsequently assessing its performance per each field.

We compared the text within the JSON data curated by GPT-4V with the ground truth data compiled by human evaluators. Given that several fields were frequently empty across most documents (e.g., Trial), our focus was on fields with values present in more than 50% of the samples (25 documents). The accuracy was calculated solely based on non-empty values. Based on the accuracy of each field's transcribed values, we qualitatively examined the characteristics of the hand-written texts to understand the strengths and weaknesses of GPT-4V.

Figure 2: Constrained prompting used for transcribing the texts.

3.

Results

The processing time for GPT-4V to read 50 documents was about 19.11 minutes.

Table 1 presents the accuracy for each individual field. The

Accuracy column of

Table 1 reports the accuracy solely based on non-empty values. Notably, the field

ward achieved the highest accuracy score of 0.8, whereas

owner obtained the lowest accuracy score of 0.18 in this evaluation. Our initial assessment suggests that, without further engineering of prompts and additional frameworks that help refine the results, GPT-4V is not at the stage of generalizable transcription tasks for hand-written texts.

To understand the reasons for the varying accuracy in transcribing tasks, we qualitatively analyzed the hand-written texts. Notably, fields that present high accuracy such as

ward and

block are more likely to provide numerical values in relatively simple forms, providing clear structures for comprehension by GPT-4V. Among the fields with more than 0.7 of accuracy are all simple numeric fields or dates, with an exception of the

use_description field. Although the

use_description field is English words, it presents relatively standardized terms, such as "Residential" or "Commercial," which may make it easy for GPT-4V to predict the values.

Conversely, fields with low accuracy, such as

owner and

appraiser_1_name, are attributed to their longer, more diverse text entries, making it challenging for GPT-4V to extract the terms accurately. Finally, we identified consistent misreads of GPT-4V, such as a confusion between "2" and "7," "1" and "7," "0" and "6," and "F" and "E". These recurring errors illustrate challenges faced by the model in distinguishing specific numerical and alphabetical characters.

Table 1: Accuracy of each field in the cover page of the urban renewal collection.

| Field |

Accuracy |

# of empty cells

|

| ward |

0.80 |

0 |

| block |

0.76 |

0 |

| appraiser_1_land_value |

0.74 |

0 |

| order_vesting_title |

0.74 |

8 |

| use_description |

0.74 |

8 |

| sheet |

0.72 |

0 |

| appraiser_1_total_value |

0.72 |

0 |

| date_offer_made |

0.69 |

8 |

| offer_accepted |

0.68 |

25 |

| final_title_certificate |

0.67 |

8 |

| appraiser_2_total_value |

0.63 |

1 |

| preliminary_title_search |

0.63 |

4 |

| parcel |

0.62 |

0 |

| appraiser_2_land_value |

0.61 |

1 |

| appraiser_1_imps_value |

0.60 |

25 |

| appraiser_1_date |

0.59 |

1 |

| concurred_in_price_date |

0.59 |

6 |

| assigned_to_relocation |

0.58 |

14 |

| appraiser_3_date |

0.57 |

8 |

| appraiser_3_total_value |

0.57 |

6 |

| lot |

0.56 |

0 |

| concurred_in_price |

0.54 |

2 |

| appraiser_1_area |

0.54 |

0 |

| appraiser_2_date |

0.53 |

1 |

| appraiser_2_area |

0.51 |

1 |

| appraiser_3_name |

0.41 |

6 |

| number_of_units |

0.41 |

23 |

| appraiser_2_name |

0.39 |

1 |

| olt_code |

0.31 |

15 |

| address |

0.30 |

0 |

| area_of_imps |

0.29 |

22 |

| appraiser_1_name |

0.22 |

0 |

| owner |

0.18 |

0 |

4.

Discussion

Our findings offer insights into the general use of LLMs like GPT-4V to transcribe handwritten documents, particularly historical ones. Because LLMs exhibit varied performance in different types of texts, further examination is necessary for their application in transcribing handwritten texts. However, they may be suitable for simple tasks, as they tend to better recognize numerical and simple texts.

Our research suggests potential directions for future research. First, since GPT-4V often misreads certain characters (e.g., confusing ‘1’ with ‘7’), these patterns could be integrated into prompt design recursively. Second, for text-based fields, options could be recursively narrowed down through trial and error, potentially incorporating human oversight. Lastly, considering the diverse characteristics and performances of LLMs across different handwriting styles, an ensemble approach combining multiple LLM tools could be developed to enhance transcription accuracy.

5.

Acknowledgments

We thank Ms. Priscilla Robinson and Dr. Richard Marciano for their continuing collaboration and the scanning of the archival documents.