In the digital economy, data has become a crucial factor of production, generating immense regenerative value for society and presenting new challenges and opportunities for cultural development. In 1992, the United Nations Educational, Scientific and Cultural Organization (UNESCO) initiated the Memory of the World Programme. The programme established the Memory of the World Register to organize and promote the world's memory heritage, particularly valuable documentary heritage. It also helped the concept of archives documentary heritage spread rapidly among national governments, non-governmental organizations, and the public worldwide. Archives documentary heritage inherits the memory storage value and evidential value of archival resources. It is not only a crucial component of the cultural data system but also plays a unique role in constructing and preserving collective memory, and it provides a reliable data source for telling cultural stories.

Data storytelling is the process of allowing data to speak for itself, informing, explaining, persuading, or captivating the target audience (Kelliher and Slaney, 2012). It emphasizes the use of narrative elements to convey insights from data analysis. Because processed data stories have a low cognitive threshold and offer an engaging experience, they enhance cultural dissemination and information retention. Building on the fundamental elements of the storytelling process, namely Data, Visuals, and Narrative (Dykes, 2019), scholars worldwide have explored theoretical models extensively, including the inverted pyramid, the Martini glass, and SUCCESs. As data types become more diverse, current research in data storytelling tends to emphasize narrative structure and the application of technology. However, the extraction, generation, and visualization of storytelling materials notably lack thorough pre-control processes, such as data screening and result verification. This deficiency may undermine the credibility and integrity of stories.

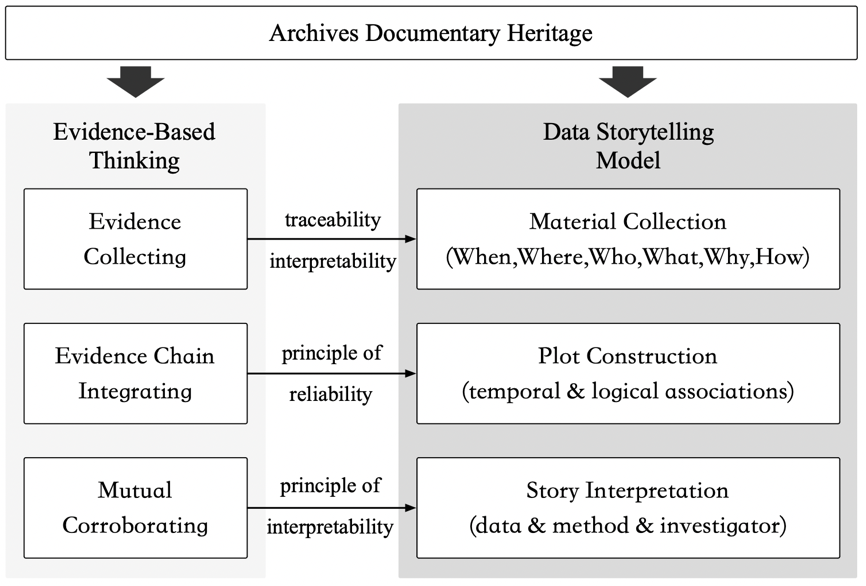

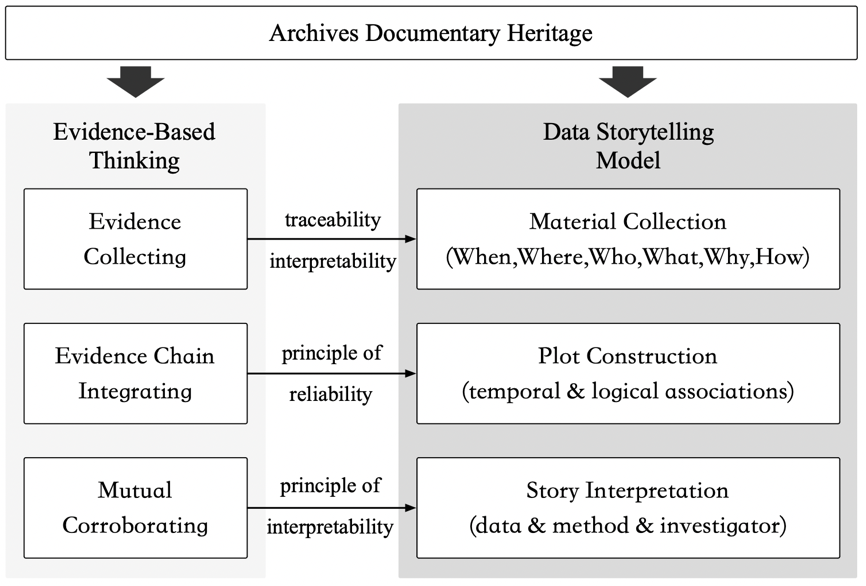

To bridge the gap between data and data stories and to narrate high-quality cultural stories, we propose incorporating evidence-based thinking to optimize the data storytelling model for archives documentary heritage. Evidence-based thinking is a critical methodology in digital humanities research, emphasizing that new research conclusions should be based on objective facts from original documents. It follows the rule of deducing evidence chains and reconstructing real events by tracing clues. Starting from the acquisition of data evidence, the approach oversees the story generation process and transforms storytelling into a process of constructing and deducing evidence chains. This ensures the traceability, reliability, and interpretability of data storytelling, with the objective of conveying authentic information to the greatest extent possible.

Figure 1

In the context of evidence-based thinking, the data storytelling of archives documentary heritage aims to ensure that each step in the formation of the narrative adheres to the evidence obtained. A data storytelling model for archives documentary heritage must therefore go through three stages: evidence collection, evidence chain integration, and mutual corroboration, which together construct a set of evidence chains that can prove the authenticity of the story. Firstly, the model upholds the principle of traceability in material collection, extracting complete narrative elements, inter-event relationships, and metadata descriptions of the original resources. It ensures that evidence is generated, transmitted, and stored through a traceable, tamper-resistant process. It also guarantees the reliability and effectiveness of the connections between evidence and facts, and between one piece of evidence and another, thereby substantiating the objective existence of historical events. Secondly, the model adheres to the principle of reliability in plot construction. Guided by strong relational connections, materials are first organized into a preliminary sequence of events based on temporal associations, clarifying the developmental trajectory of the story. Text mining and semantic analysis techniques are then employed to supplement causes and effects based on logical associations, linking multiple evidence chains to reconstruct the intricacies of the story. Appropriate narrative structures, visualization tools, and perspective effects are then chosen to present the story content to users. Thirdly, the model adheres to the principle of interpretability in story interpretation. It employs data triangulation, method triangulation, and investigator triangulation (Malamatidou, 2017) to elucidate the temporal context and value impacts of the story, endorsing the authenticity of the story at each narrative stage and ensuring comprehensive evidence support.
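The three stages can be illustrated with a minimal Python sketch. It is not the model's actual implementation: the `Evidence` fields, the hash-linked chain used to stand in for tamper-resistant storage, and the rule that an event counts as corroborated when at least two distinct source types attest to it are all assumptions made for illustration, and the sample records are placeholders rather than real archival data.

```python
from dataclasses import dataclass
import hashlib


@dataclass(frozen=True)
class Evidence:
    """One piece of evidence with provenance metadata (hypothetical schema)."""
    event: str        # event the item attests to
    year: int         # date of the event, used for temporal ordering
    source_id: str    # identifier of the original resource (traceability)
    source_type: str  # e.g. "archive", "artifact", "literature"
    content: str      # extracted narrative content

    def digest(self) -> str:
        payload = "|".join(
            [self.event, str(self.year), self.source_id, self.source_type, self.content]
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(items):
    """Stage 1-2: order evidence by time and link each item to its predecessor."""
    chain, prev = [], ""
    for item in sorted(items, key=lambda e: (e.year, e.source_id)):
        link = hashlib.sha256((prev + item.digest()).encode("utf-8")).hexdigest()
        chain.append((item, link))
        prev = link
    return chain


def verify_chain(chain):
    """Recompute every link; any altered item or link breaks the chain."""
    prev = ""
    for item, link in chain:
        expected = hashlib.sha256((prev + item.digest()).encode("utf-8")).hexdigest()
        if link != expected:
            return False
        prev = link
    return True


def corroborated_events(items, min_source_types=2):
    """Stage 3 (simplified data triangulation): events backed by several source types."""
    types_by_event = {}
    for item in items:
        types_by_event.setdefault(item.event, set()).add(item.source_type)
    return {ev for ev, types in types_by_event.items() if len(types) >= min_source_types}


# Placeholder records, not real archival data.
sample = [
    Evidence("event-A", 1900, "doc-001", "archive", "placeholder text 1"),
    Evidence("event-A", 1900, "obj-007", "artifact", "placeholder text 2"),
    Evidence("event-B", 1850, "doc-002", "archive", "placeholder text 3"),
]

chain = build_chain(sample)
print([item.source_id for item, _ in chain])
print(verify_chain(chain))
print(corroborated_events(sample))
```

In this sketch, the temporal sort gives the preliminary event sequence, the hash links make later alteration of any record detectable, and the corroboration check flags events that rest on a single kind of source and therefore need further evidence. Supplementing causes and effects through text mining and semantic analysis is outside its scope.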

To verify the feasibility and advantages of the proposed model, we take the Chinese silk archive as a case study and establish a historical evidence chain from it that substantiates the development and transformation of silk processing technology, as well as the story of silk civilization in Chinese history.