Abstract

Crowdsourcing systems hold promise for collaborative knowledge advancement, which is vital in areas such as digital humanities. Gamification may increase user participation; however, its potential negative behavioral effects call for a measured approach. This research examines the relationship between crowd types, scoring system designs and resulting contribution patterns in a gamified crowdsourcing platform. We aim to provide empirical insights on designing scoring incentives that responsibly encourage high-quality participation across diverse crowd groups.

Specifically, we compare participation and quality outcomes between an exponential scoring system that offers accelerating, unpredictable rewards and a predictable linear point scheme. Outcomes are compared across crowds of subject-matter experts, student groups and general public participants. Findings highlight the benefit of carefully matching the incentive design to the crowd type and the knowledge sought.

Background and Theory

Crowdsourcing is a rapidly growing method in digital humanities for engaging the public in tasks that generate valuable data around cultural heritage collections, archives, annotated databases, and other digital collections initiatives (Beer / Burrows 2013; Bocanegra Barbecho / Ortega Santos 2023). By coordinating large public groups for problem-solving and value creation, crowdsourcing reduces reliance on small circles of experts (Beer / Burrows 2013; Richter et al. 2018). However, integrating such systems raises challenges in sustaining participants' motivation and obtaining high-quality input (Cooper et al. 2010; Majchrzak et al. 2013; Richter et al. 2019). Gamification has emerged as a promising approach to address these challenges by incorporating user-friendly tools and positive reinforcements such as feedback and virtual rewards, which can increase user engagement (Deterding et al. 2011; Nahmias et al. 2021). While these gamification techniques show potential, their wider social impacts warrant further research (Koivisto / Hamari 2019; Mulliken / Kreymer 2023; Richter et al. 2019). Carefully designed incentives that consider social impacts and take into account different crowds and types of knowledge creation are increasingly important (Majchrzak et al. 2013).

This research presents a novel approach and empirical insights on how participation varies between incentive models, addressing an under-explored topic. We explore the complex relationship between crowdsourced groups, scoring system designs and resulting contribution patterns, to inform the design of more sustainable collective intelligence platforms. Specifically, the study examines differences between exponential scoring schemes, which offer accelerating, unpredictable reward progressions, and predictable linear point allocations. Participation and quality outcomes are systematically contrasted across subject-matter experts, student groups and general public participants.

Methods and Findings

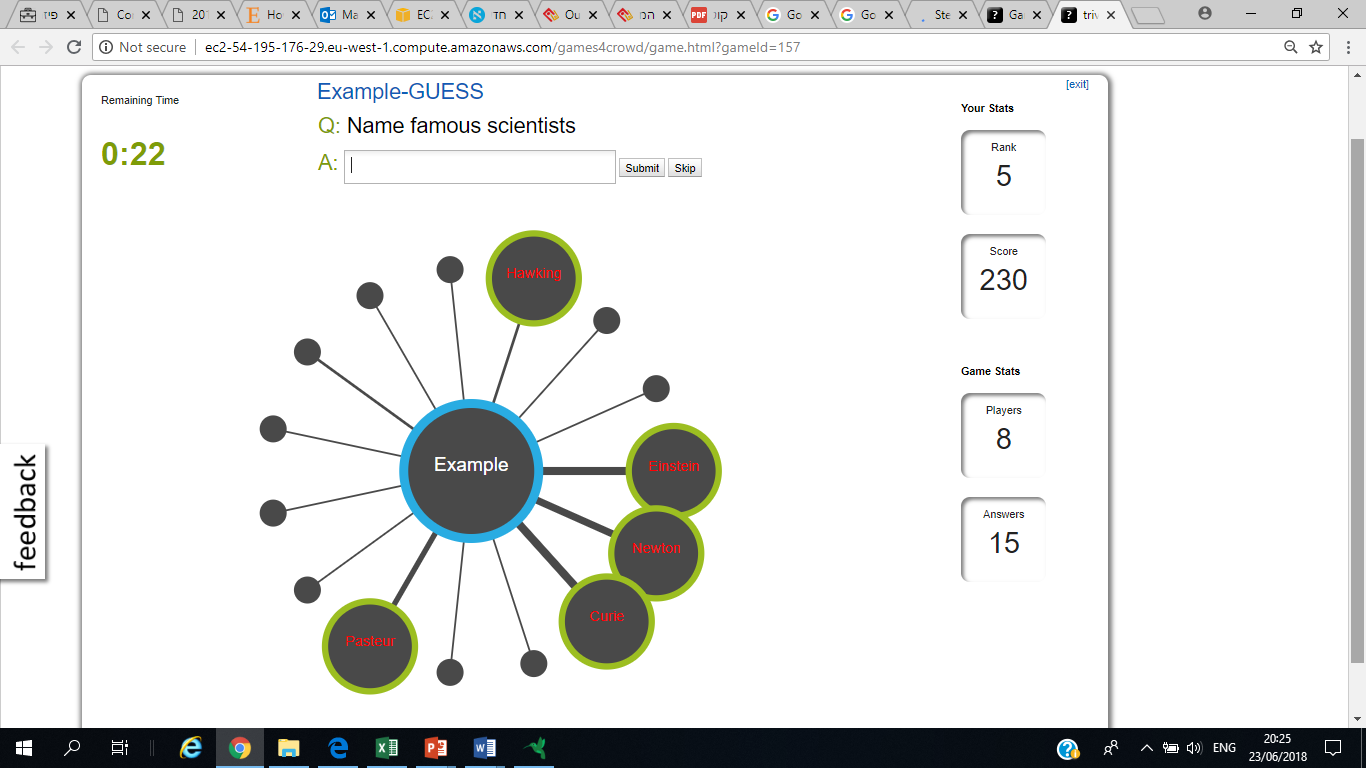

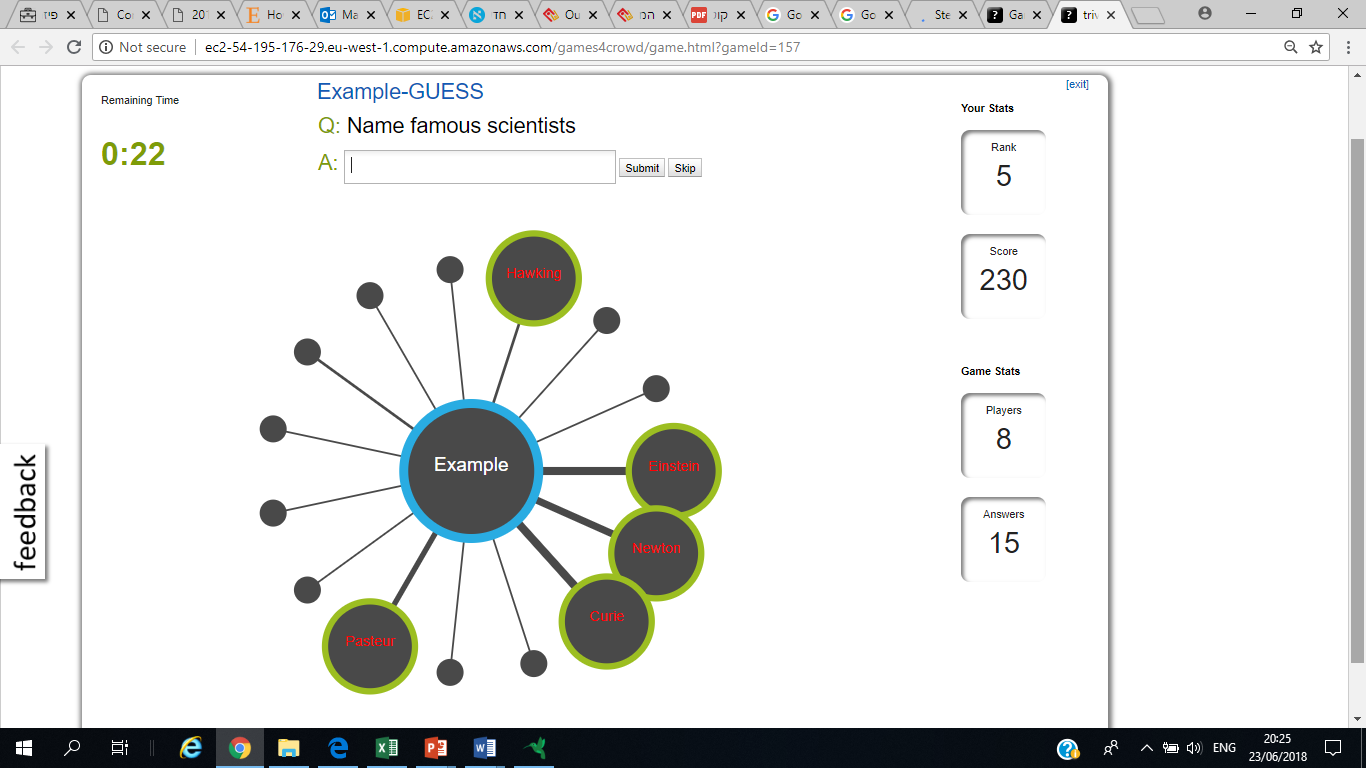

An online question-prompting platform was gamified using a scoring wizard to implement variable point reward schemes (Fig.1). We examined participation and contribution differences between exponential and linear scoring schemes across 393 users from various crowdsourced groups. The analysis combined usage metrics from server logs covering participation, duration, and quantity of contributions. Quality analysis focused on the relevance and usefulness of participant responses.

Fig.1. Game interface
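
The contrast between the two scoring schemes can be illustrated with a minimal sketch. The platform's actual scoring rules are not specified here, so the function names, the base reward of 10 points and the doubling factor are all illustrative assumptions; the unpredictable component of the exponential scheme is deliberately left out to keep the example deterministic.

```python
def linear_points(n_contributions: int, points_per_item: int = 10) -> int:
    """Cumulative score when every contribution earns the same fixed reward.

    `points_per_item` is an assumed value, not taken from the study.
    """
    return points_per_item * n_contributions


def exponential_points(n_contributions: int, base_points: int = 10, growth: int = 2) -> int:
    """Cumulative score when each contribution earns `growth` times the previous reward.

    `base_points` and `growth` are assumed values, not taken from the study.
    """
    total = 0
    for k in range(n_contributions):
        # Reward for contribution k+1 is base_points * growth**k.
        total += base_points * growth ** k
    return total


if __name__ == "__main__":
    # Print cumulative scores side by side for the first few contributions.
    for n in range(1, 7):
        print(n, linear_points(n), exponential_points(n))
```

Under these assumptions both schemes give the same reward for the first contribution and then diverge, with the exponential scheme rewarding sustained participation far more heavily than the linear one.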

Data were analyzed using a hierarchical linear modeling (HLM) approach to compare scoring systems across the crowd clusters. Results showed that exponential scoring drove higher response volumes yet also increased tangential content from students, while subject-matter experts consistently provided the highest-quality responses.
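
For readers unfamiliar with HLM, a generic two-level specification is sketched below. It is not the study's actual model, which is not detailed here; the symbols are assumptions chosen for illustration: $y_{ij}$ is an outcome (for example, number of contributions) for user $i$ in crowd group $j$, and $X_{ij}$ equals 1 under exponential scoring and 0 under linear scoring.

```latex
% Level 1: users within crowd groups
y_{ij} = \beta_{0j} + \beta_{1j} X_{ij} + \varepsilon_{ij}

% Level 2: variation between crowd groups
\beta_{0j} = \gamma_{00} + u_{0j}
\beta_{1j} = \gamma_{10} + u_{1j}
```

In this form, $\gamma_{10}$ captures the average effect of the exponential scheme, while $u_{1j}$ allows that effect to differ between crowd groups.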

Further, although the exponential condition led to higher noise rates than the linear scheme among the general public, the findings indicate that quality behavior among students can be shaped, with the exponential scheme incentivizing a lower rate of low-quality responses. Surprisingly, mobilizing a crowd of experts proved more difficult than mobilizing other groups, with shorter participation duration and lower response rates.

Overall, matching incentive design with audience and knowledge type emerges as beneficial. Findings highlight nuances in calibrating incentives to crowd demographics and goals.

Insights and Applications

Technology’s central role amidst the COVID-19 pandemic highlights the need to better understand the social effects of digital participation platforms. The findings illustrate how subtle design decisions can significantly shape collective user behaviors and knowledge sharing patterns. These insights are important for digital humanities, as responsibly architecting crowd-based knowledge sharing platforms ties directly to furthering fields like cultural heritage advancement through equitable collective intelligence.

The response patterns demonstrate dependencies between incentive architectures and crowd diversity which warrant further research. The insights from this study can aid future crowd-based systems by tailoring motivational structures and incentives to the needs of different crowd types as well as the intended knowledge goals.